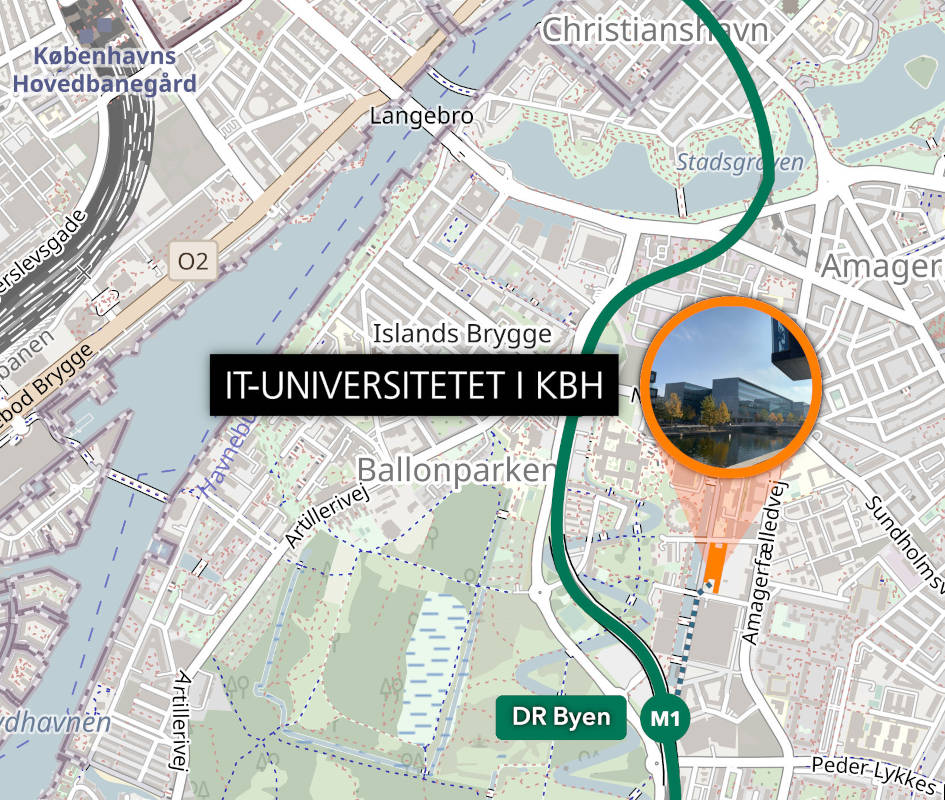

The Multi* Workshop on Linguistic Variation is planned for the 5th and 6th October, 2023 and will take place at the IT University of Copenhagen.

The sessions are categorized along a broad range of language communities (e.g., high/mid/low-resource, multilingualism, atypical domains, dialects), with the goal of aligning NLP researchers and practitioners with the language communities they serve, and discussing how to get there.

How to Attend

All sessions will take place on the campus of the IT University of Copenhagen at Rued Langgaards Vej 7, 2300 København S. Attendance is free of charge—just register here.

As a parallel option, we also invite virtual participants to join via Zoom.

Access

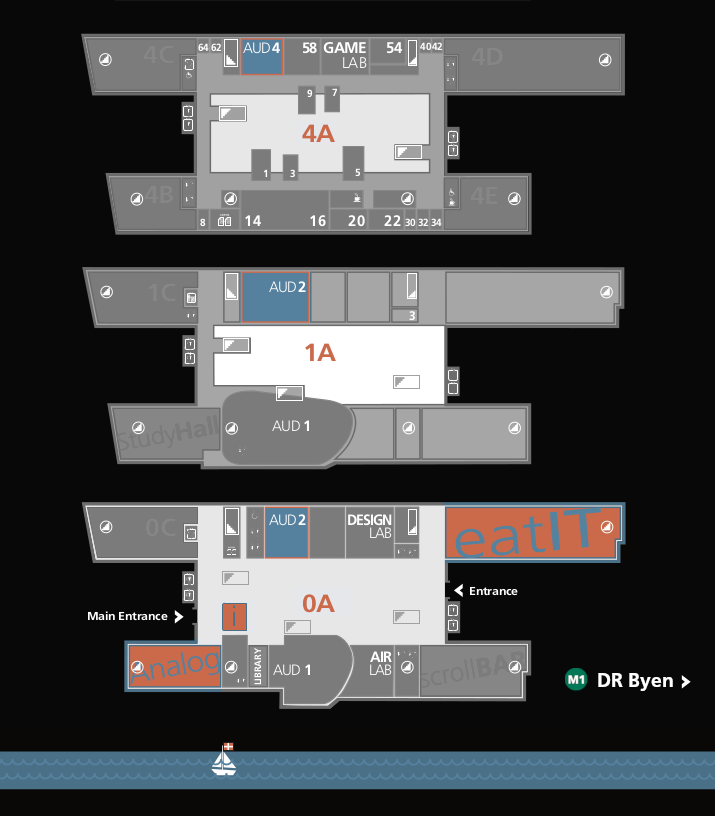

Instructions on how to reach ITU can be found here. We recommend taking metro line M1 to DR Byen, and walking 5 minutes north along the canal to arrive at the main campus.

Auditorium 2 is accessible from both the ground floor as well as the first floor. Auditorium 4 can be found on the fourth floor. For lunch, our canteen "eatIT" provides a pay-by-weight buffet with many vegan options, while Café Analog provides excellent coffee prepared by student and staff volunteers with the best quality-price ratio in the whole of Copenhagen.

Program

Day 1 Day 2Day 1

5th October, 2023 in Auditorium 2.

Opening Session

Organizers: Welcome and Introduction

14:00 – 14:15 in AUD 2

Session 1

Finding Local Minima in a Maximum Resource Language

14:15 – 16:00 in AUD 2

Dirk Hovy: How Solved is NLP?

Bocconi University

Large Language Models have made impressive contributions to NLP. We can now translate, summarize, and generate text at human and super-human levels. So, are we done with NLP? In this talk, I will look at some of the (sizable) remaining pockets of unresolved questions and issues, even in high-resource languages like English. It turns out that there is still plenty to do with language of and for children and non-standard speakers, the safety and harmlessness of models, and the application to non-standard tasks. I will suggest some aspects that can make for interesting future directions and enjoyable puzzling to make NLP fairer and (even) more performative.

Elisa Bassignana: Current Challenges in Information Extraction

IT University of Copenhagen

With the increase of digitized data and extensive access to it, the task of extracting relevant information with respect to a given query has become crucial. The variety of applications of the tasks related to Information Extraction, together with the impossibility of annotating data for every individual setup, require models to be robust to data shifts. In this talk I will present the findings of my PhD project with respect to one of the most important challenges of Information Extraction: The ability of models to perform in unseen scenarios (i.e., unknown text domains and unknown queries). Specifically, I will dive deep into the challenges of cross-domain Relation Extraction.

Rob van der Goot: Overcoming Challenging Setups in NLP through Normalization and Multi-task Learning

IT University of Copenhagen

This talk will consist of three parts, aligned to the focus of Rob's research in 3 parts of his career. 1. Lexical normalization and its downstream effect on syntactic tasks: ranging from benchmark creation to SOTA lexical normalization and finally the usefullness of normalization 2. Multi-task learning for adaptation in challenging setups. I will cover several studies in a variety of setups that aim to shed some light on the beneficiality of auxiliary tasks 3. Back to the basics: can language models solve tasks like word segmentation and language identification, or are there better approaches? what are the remaining challenges for these tasks?

16:00 – 16:15Coffee Break

Session 2

Tips from the Locals

16:15 – 18:00 in AUD 2

Esben Opstrup Hansen: Using NLP to Improve Labour Market Policy

Danish Agency for Labour Market and Recruitment

STAR (Styrelsen for Arbejdsmarked og Rekruttering) is developing a skills tools. The project is meant to generate knowledge of which skills and tasks are in demand. The knowledge is then meant to be used to plan re-education of unemployed, and for caseworkers in Jobcenters to guide unemployed in search for jobs, and where they can use their skills. The project uses job vacancies as the data source. It aims to identify each individual unit of expression of skill or task in job vacancies. Then group these identified units into smaller and larger groups, where the smallest groups contains concepts of the same meaning, to larger groups of similar concepts.

Mike Zhang: Natural Language Processing for Job Market Understanding

IT University of Copenhagen

We have a pressing technological need for systems that can efficiently extract occupational skills for the broader job market. This demand is particularly heightened in the era of Industry 4.0 (Heiner et al., 2015) and the emergence of cutting-edge large language models (Zarifhonarvar, 2023). It is necessary that we swiftly adapt to the ever-evolving landscape of qualifications. In this presentation, I will introduce two contributions where we have created open-source datasets and language models specifically designed to enhance Natural Language Processing for Human Resources applications, with a focus on both English and Danish. Lastly, I will briefly delve into the challenges we face when harnessing these large language models to address the demands of the labor market.

Toine Bogers & Quichi Li: The Jobmatch Project: Supporting Recruiters through Algorithmic Hiring

IT University of Copenhagen

In my talk I will introduce the Jobmatch project, in which we develop algorithmic solution to support the recruiters of Jobindex, one of Scandinavia's largest job portals, in identifying relevant candidates for newly published job ads. I will describe our work on building better algorithms for candidate recommendation as well as work on automatically generating explanations for why a candidate was recommended a specific job.

Day 2

6th October, 2023 in Auditorium 4 (10:00–12:00) and Auditorium 2 (13:30–17:30).

Opening Session

Organizers: Welcome Back

10:00 – 10:15 in AUD 4

Session 3

Embracing Regional Variety

10:15 – 12:00 in AUD 4

Alan Ramponi: When NLP Meets Language Varieties of Italy: Challenges and Opportunities

Fondazione Bruno Kessler

Italy is one of the most linguistically-diverse countries in Europe: besides Standard Italian, a large number of local languages, their dialects, and regional varieties of Italian are spoken across the country. The natural language processing (NLP) community has recently begun to include language varieties of Italy in its repertoire; however, most work implicitly assumes that language varieties of Italy are interchangeable with each other and with standardized languages in terms of language functions and technological needs. In this talk, I will discuss the main challenges of the default NLP approach when dealing with such varieties, presenting opportunities for locally-meaningful work and alternative directions for NLP in under-explored areas of research.

Barbara Plank: Towards Inclusive & Human-facing Natural Language Processing

University of Munich / IT University of Copenhagen

Despite the recent success of Natural Language Processing (NLP), driven by advances in large language models (LLMs), there are many challenges ahead to make NLP more human-facing and inclusive. For instance, low-resource languages, non-standard data and dialects pose particular challenges, due to the high variability in language paired with low availability of data. Moreover, while language varies along many dimensions, evaluation today largely focuses data assumed to be `flawless'. In this talk I will survey some of the challenges, and outline potential solutions, discussing work on NLP for dialects and data-centric NLP, which includes learning in light of human label variation.

Noëmi Aepli: Evaluating Dialect Generation

University of Zürich

In order to make meaningful advancements in NLP, it is crucial that we maintain a keen awareness of the limitations inherent in the evaluation metrics we employ. We evaluate the robustness of metrics to non-standardized dialects, i.e., their ability to handle spelling differences in language varieties lacking standardized orthography. To explore this, we compiled a dataset of human translations and human judgments for automatic machine translations from English into two Swiss German dialects. Our findings indicate that current metrics struggle to reliably evaluate Swiss German text generation outputs, particularly at the segment level. We propose initial design adaptations aimed at enhancing robustness when dealing with non-standardized dialects, although there remains much room for further improvement.

12:00 – 13:30Lunch Break

Session 4

Embracing Local Variety...with What Resources?

13:30 – 15:15 in AUD 2

David Sasu: Exploiting Speech Prosody to Improve Spoken Language Understanding for Low-resource African Languages

IT University of Copenhagen

Spoken Language Understanding for low-resource languages is still relatively under-developed, since for most of these under-represented languages, the computational models for performing common spoken language understanding tasks do not exist. This is primarily due to the fact that the requisite forms of data needed for the construction of such models are either non-existent or extremely difficult to obtain. However, even if the data needed for the creation of Spoken Language Understanding systems for low-resource languages could be made readily available, these systems are typically designed using only textual information hence ignoring all of the rich information that is present within the corresponding audio representations of the textual data which may be useful in improving the performance of these systems since most of these low-resource languages, especially African Languages, are tonal in nature. This talk would explore how speech prosodic information could be extracted and applied to play a key role improving spoken language understanding systems for these languages.

Frank Tsiwah: NLP for African Languages: Challenges and Opportunities

University of Groningen

Although the African continent houses over 2,000 spoken languages (approximately a third of the 7000 languages spoken globally) that are linguistically diverse, these languages are highly underrepresented in existing natural language processing (NLP) research, datasets, and technologies. Even the few major languages from key African regions that do find representation in NLP face the problem of scarce data availability, resulting in subpar performance of such systems. In this talk, I will be discussing the present state of affairs of NLP for African languages, some challenges, but also some opportunities that we can leverage to advance speech and language technologies and research for African languages.

Heather Lent: Lessons from CreoleVal: Dataset Creation with Community-in-the-Loop

Aalborg University

Creoles are a diverse yet marginalized group of languages, despite a large population of speakers globally (in the hundreds of millions). This talk will present actionable and practical lessons gleaned from CreoleVal: a large-scale effort to create multilingual multitask benchmarks for Creoles, conducted in collaboration with Creole-speaking communities and NLP researchers from across ten universities. Particularly, these lessons will touch upon fostering collaborations with the relevant communities, as well as effective strategies for creating high-quality datasets in the face of data scarcity.

Tim Baldwin

Mohamed bin Zayed University of Artificial Intelligence

15:15 – 15:45Coffee Break

Session 5

What Can We Learn from Each Other?

15:45 – 17:15 in AUD 2

Joakim Nivre: Ten Years of Universal Dependencies

Uppsala University / Research Institutes of Sweden

Universal Dependencies (UD) is a project developing cross-linguistically consistent treebank annotation for many languages, with the goal of facilitating multilingual parser development, cross-lingual learning, and parsing research from a language typology perspective. Since UD was launched almost ten years ago, it has grown into a large community effort involving over 500 researchers around the world, together producing treebanks for 141 languages and enabling new research directions in both NLP and linguistics. In this talk, I will briefly review the history and development of UD and discuss challenges that we need to face when bringing UD into the future.

Jörg Tiedemann: The Blessings and Curse of Multilinguality in NLP

University of Helsinki

In our group, we focus on the development of language technology that covers a large range of linguistic diversity. Our main focus is currently on the development of neural translation models and multilingual representations that can be learned from massively parallel data sets. In my talk, I will briefly introduce the OPUS eco-system with its resources and tools that we provide to the research community. Lesser resouced languages are particularly interesting as modern data-driven NLP faces difficult challenges when training resources are scarce. There are exciting areas of knowledge transfer and multiingual NLP that enable progress in this area. I will briefly mention work we do in our group.

Max Müller-Eberstein: What We Share—A Language Model's Perspective

IT University of Copenhagen

Languages across the globe exhibit an incredible amount of diversity, yet also naturally share certain characteristics. Language models, by approximating distributions over large corpora of multi-lingual texts, learn latent spaces which allow us to compare distributional shifts across languages. This in turn enables data-driven, cross-lingual analyses of what our languages share and to which degree. In this talk, we explore how to measure these similarities using weakly-supervised methods, subspace probing and spectral frequency analysis. These methods allow us to uncover surprising cross-lingual overlaps, which help identify suitable languages for transfer learning in specialized low-resource scenarios.

Closing Session

Organizers: Recap and Goodbye

17:15 – 17:30

17:30Ice Cream Social

Organization

This workshop is kindly supported by DFF grant 9131-00019B (MultiSkill) and DFF grant 9063-00077B (MultiVaLUe).